One eventful day in our company, the operations and maintenance team alerted us to a concerning issue: Our pod was restarted 8 times per day, which triggered an urgent investigation.

Upon viewing the Kibana log, we discovered that the JVM wasn’t throwing any errors, yet the service was consistently being restarted without warning. It became evident that the process was abruptly terminated. Our initial assessment pointed towards the pod hitting its memory limit and being terminated by Kubernetes’ out-of-memory (OOM) mechanism.

In this article, we walk through how we troubleshot this perplexing issue, covering our approach and the steps we took to identify and resolve the root cause.

Problem Description

The operations team informed us that our pod was restarting 8 times a day, prompting an investigation. Despite checking the Kibana log, we found no JVM errors, but the service kept restarting unexpectedly.

Our initial assessment was that the pod had hit its memory limit and been killed by Kubernetes’ out-of-memory (OOM) mechanism. That seemed odd, since we had set the pod’s memory limit higher than the JVM’s configured memory settings. We needed to dig deeper to understand what was causing the issue.

Troubleshooting Process

Initial positioning

First, I asked my colleague for a description of the pod. The key information is shown below.

    Containers:
      container-prod--:
        Container ID:   --
        Image:          --
        Image ID:       docker-pullable://--
        Port:           8080/TCP
        Host Port:      0/TCP
        State:          Running
          Started:      Fri, 05 Feb 2024 11:40:01 +0800
        Last State:     Terminated
          Reason:       Error
          Exit Code:    137
          Started:      Fri, 05 Feb 2024 11:27:38 +0800
          Finished:     Fri, 05 Feb 2024 11:39:58 +0800
        Ready:          True
        Restart Count:  8
        Limits:
          cpu:     8
          memory:  6Gi
        Requests:
          cpu:     100m
          memory:  512Mi

You’ll notice in the description the “Last State: Terminated” entry with “Exit Code: 137”. This code indicates that the container process was forcefully terminated by SIGKILL. Typically, this occurs when the pod exceeds its memory limit and Kubernetes intervenes.
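To make the meaning of exit code 137 concrete: by the usual POSIX shell convention, an exit code above 128 means the process was killed by signal number (code - 128), and 137 - 128 = 9 is SIGKILL. The helper below is a minimal sketch of that arithmetic; the function name `decode_exit_code` is ours for illustration, and the sketch assumes a POSIX system where Python's `signal` module knows SIGKILL.

```python
import signal


def decode_exit_code(exit_code: int) -> str:
    """Explain an exit code using the common 128 + signal-number convention."""
    if exit_code > 128:
        signum = exit_code - 128
        try:
            # Look up the symbolic name of the signal, e.g. 9 -> SIGKILL.
            name = signal.Signals(signum).name
        except ValueError:
            # Not a signal number known on this platform.
            name = f"signal {signum}"
        return f"killed by {name}"
    return f"exited with status {exit_code}"


print(decode_exit_code(137))  # killed by SIGKILL
```

Note that the exit code alone only says that the process received SIGKILL. It does not say who sent it, which is why the Reason field matters in the analysis that follows.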

As a result, we decided to temporarily increase the pod’s memory limit in the production environment to stabilize the service. After that, we planned to investigate the excessive off-heap memory usage within the pod to address the root cause of the issue.

Further Analysis

However, feedback from the operations and maintenance team revealed that expanding the pod’s memory limit was not feasible due to the host’s memory already being at 99% capacity. Upon further examination, it was observed through pod memory monitoring that the pod was killed when it had only consumed around 4G of memory, well below its 6G upper limit.

This raises the question: why was the pod terminated before reaching its memory limit? Seeking answers, I turned to Google and stumbled upon some clues:

- Typically, if a pod exceeds its memory limit and gets terminated, the pod description will show Exit Code: 137, with the Reason being OOMKilled, not Error.

- When the host’s memory is full, Kubernetes triggers a protective mechanism to evict some pods and free up resources.

The puzzling aspect remains: why is this particular pod being evicted repeatedly while other services in the cluster remain unaffected?

Puzzle Solved

In the end, Google gave the answer:

Why my pod gets OOMKill (exit code 137) without reaching threshold of requested memory

The author in the link encountered the same situation as me. The pod was killed before it reached the memory limit, and also:

    Last State:     Terminated
      Reason:       Error
      Exit Code:    137

What is k8s QoS?

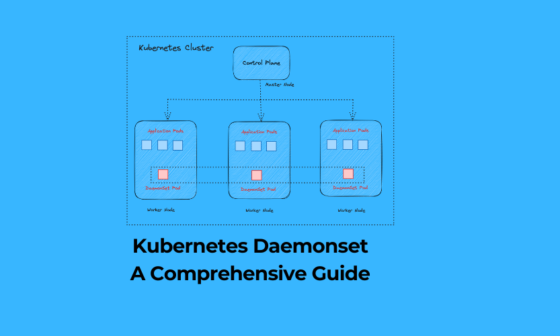

QoS stands for Quality of Service, a metric used by Kubernetes (k8s) to evaluate the resource usage quality of each pod. It plays a crucial role in Kubernetes’ decision-making process regarding pod eviction when node resources are depleted. You can find the official description of QoS here.

Kubernetes categorizes pods based on the resource limits specified in the pod description file.

| QoS | Condition |
|---|---|
| Guaranteed | 1. Every container in the pod sets both CPU and memory requests and limits. 2. For every container, the CPU and memory requests equal the limits (requests must match limits within each container; values may differ between containers). |
| Burstable | 1. The pod does not meet the Guaranteed conditions. 2. At least one container has a CPU or memory request or limit set. |
| BestEffort | No container in the pod has any resource request or limit set. |

When node resources become depleted, Kubernetes (k8s) will prioritize killing pods to free up resources based on the following order: BestEffort -> Burstable -> Guaranteed.
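The classification rules in the table can be sketched as a small function. This is a simplified illustration, not the actual Kubernetes implementation: the function name `qos_class` and the dict layout are our own, quantities are compared as plain strings (so "6Gi" and "6144Mi" would count as different), and the sketch ignores the defaulting that Kubernetes applies when only a limit is specified.

```python
def qos_class(containers):
    """Classify a pod from its containers' resource settings.

    containers: list of dicts such as
        {"requests": {"cpu": "100m", "memory": "512Mi"},
         "limits":   {"cpu": "8",    "memory": "6Gi"}}
    """
    any_set = False          # does any container set a request or limit?
    all_guaranteed = True    # does every container have requests == limits?
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        if requests or limits:
            any_set = True
        for resource in ("cpu", "memory"):
            if (
                resource not in requests
                or resource not in limits
                or requests[resource] != limits[resource]
            ):
                all_guaranteed = False
    if not any_set:
        return "BestEffort"
    if all_guaranteed:
        return "Guaranteed"
    return "Burstable"


# The pod from this article: requests are far below limits.
article_pod = [{
    "requests": {"cpu": "100m", "memory": "512Mi"},
    "limits": {"cpu": "8", "memory": "6Gi"},
}]
print(qos_class(article_pod))
```

Setting the requests equal to the limits for both CPU and memory in every container is what moves a pod from Burstable to Guaranteed in this sketch.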

Reviewing the pod description provided by the operations and maintenance team, we can observe the resource limits specified for this pod:

    Limits:
      cpu:     8
      memory:  6Gi
    Requests:
      cpu:     100m
      memory:  512Mi

Clearly, it adheres to the Burstable standard.

Therefore, in situations where the host memory is depleted and other services are Guaranteed, Kubernetes will continue to terminate the pod to free up resources, regardless of whether the pod itself has reached the 6G memory limit.

When the QoS is the same, which pod is evicted first?

Upon discussions with the operations and maintenance team, we discovered that the configuration, limits, and requests of all pods in our cluster vary. Surprisingly, all pods are categorized as Burstable. This begs the question: why is only this particular pod repeatedly evicted while others remain unaffected?

Even within the same Quality of Service (QoS) category, there are distinct eviction priorities. To understand this further, we referred to the official documentation.

The most crucial paragraph from the official documentation regarding the eviction mechanism when node resources are depleted is as follows:

If the kubelet can’t reclaim memory before a node experiences OOM, the oom_killer calculates an oom_score based on the percentage of memory it’s using on the node, and then adds the oom_score_adj to get an effective oom_score for each container. It then kills the container with the highest score. This means that containers in low QoS pods that consume a large amount of memory relative to their scheduling requests are killed first.

Put simply, the kill order is determined by the oom_score. Each container is assigned an oom_score, calculated from the share of the node’s total memory that it is using, plus the container’s oom_score_adj value.

The oom_score_adj value is an offset value computed by Kubernetes (k8s) based on the pod’s Quality of Service (QoS). The calculation method is as follows:

| QoS | oom_score_adj |
|---|---|
| Guaranteed | -997 |
| BestEffort | 1000 |
| Burstable | min(max(2, 1000 - (1000 × memoryRequestBytes) / machineMemoryCapacityBytes), 999) |

From this table, we observe the following:

- The priority is first determined by the overall QoS class: BestEffort -> Burstable -> Guaranteed.

- When two pods are both Burstable, a larger memory request lowers the oom_score_adj, so the pod whose actual memory usage is largest relative to its memory request is killed first.
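To see how the Burstable formula from the table plays out, here is a rough sketch. The function names are ours, the 32 GiB node capacity is a made-up value for illustration (the article does not state the real node size), and `approximate_oom_score` only approximates the kernel's scoring as "share of node memory in use, in thousandths, plus oom_score_adj".

```python
MiB = 1024 ** 2
GiB = 1024 ** 3


def burstable_oom_score_adj(memory_request_bytes, machine_capacity_bytes):
    """oom_score_adj for a Burstable container, following the table's formula."""
    adj = 1000 - (1000 * memory_request_bytes) // machine_capacity_bytes
    return min(max(2, adj), 999)


def approximate_oom_score(usage_bytes, memory_request_bytes, machine_capacity_bytes):
    """Rough effective score: memory share in thousandths plus the adjustment."""
    usage_share = (1000 * usage_bytes) // machine_capacity_bytes
    return usage_share + burstable_oom_score_adj(
        memory_request_bytes, machine_capacity_bytes
    )


node_capacity = 32 * GiB  # hypothetical node size, not taken from the article

# Two Burstable pods with the same 512Mi request but different actual usage.
heavy = approximate_oom_score(4 * GiB, 512 * MiB, node_capacity)
light = approximate_oom_score(1 * GiB, 512 * MiB, node_capacity)
print(heavy, light)
```

With identical requests, the adjustment term is the same for both pods, so the pod using more memory ends up with the higher score and is the first candidate to be killed.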

Summary

At this juncture, we can confidently identify the reason behind the repeated restarts of the Pod:

- The memory of the Kubernetes (k8s) node host reaches full capacity, prompting Node-pressure Eviction.

- Following the priority of Node-pressure Eviction, k8s selects the pod with the highest oom_score for eviction.

- Given that all pods are categorized as Burstable and have the same memory request of 512Mi, the pod with the highest memory usage will have the highest oom_score.

- This particular service consistently exhibits the highest memory usage among all pods. Consequently, during each calculation, this pod ultimately ends up being terminated.

So how do we solve it?

- Expand the host’s memory. Otherwise, pods will inevitably be killed, and the only open question is which one.

- For key service pods, set the request and limit to be exactly the same, and set the pod’s QoS to Guaranteed to reduce the chance of the pod being killed as much as possible.

Conclusion

I hope this walkthrough helps you troubleshoot similar issues in your day-to-day work with Kubernetes.