Welcome to this post on GKE Standard vs Autopilot. As the popularity of containerized applications continues to rise, Kubernetes has become the go-to platform for deploying and managing them.

However, its complexity and steep learning curve have deterred many organizations from adopting it. But with the introduction of Autopilot mode for Google Kubernetes Engine (GKE), Google has made Kubernetes more accessible and manageable for everyone.

In this blog post, we’ll compare GKE Standard and Autopilot and help you choose the best mode for your organization based on your unique workloads and priorities.

What’s Kubernetes?

Kubernetes, also referred to as “kube” or “k8s,” is a powerful open-source container orchestration platform. It automates the deployment, scaling, and management of containerized applications.

What’s GKE?

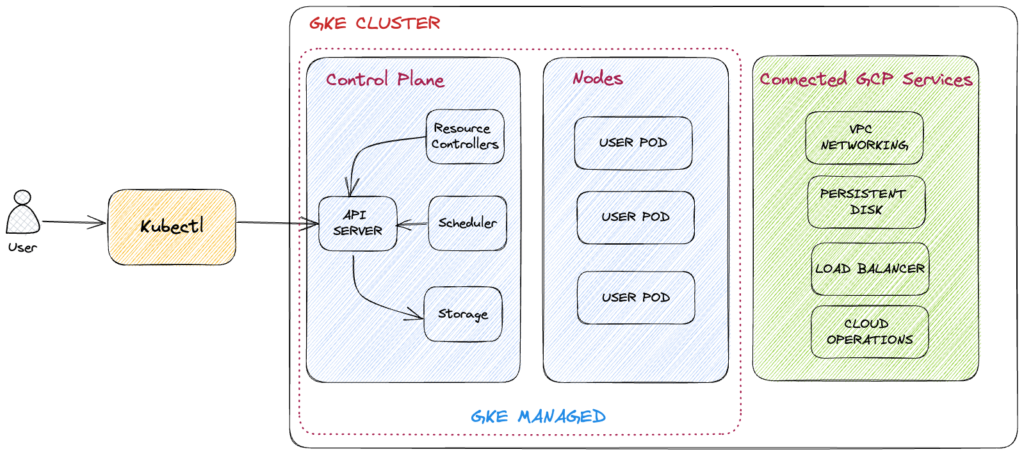

Google Kubernetes Engine (GKE) is a managed Kubernetes service offered by Google Cloud. A GKE cluster consists of a Google-managed control plane and a group of Compute Engine instances that act as worker nodes. You can create a GKE cluster from the Google Cloud console or with the ‘gcloud’ command-line tool from the Google Cloud SDK.

Customization options for GKE clusters include varying machine types, node quantities, and network configurations.
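As an illustration of those options, the sketch below creates a Standard cluster with an explicit machine type, node count, boot disk size, and VPC network. The cluster name `my-custom-gke` and the specific values are assumptions chosen for the example; `default` is the VPC network (and auto-created subnet) that new projects usually have.

```shell
#!/usr/bin/env bash
# Hypothetical cluster name and example sizing; the zone matches the tutorial below.
CLUSTER_NAME="my-custom-gke"
ZONE="europe-west9-a"
MACHINE_TYPE="e2-standard-4"
NUM_NODES=3

# Machine type, node count, disk size and network placement are set at creation time.
gcloud container clusters create "$CLUSTER_NAME" \
  --zone "$ZONE" \
  --machine-type "$MACHINE_TYPE" \
  --num-nodes "$NUM_NODES" \
  --disk-size 50 \
  --network default \
  --subnetwork default
```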

You interact with the cluster through Kubernetes itself, for example with kubectl, to deploy and manage applications, perform administrative tasks, set policies, and monitor the health of your workloads.

Running a GKE cluster also gives you access to advanced cluster management features from Google Cloud, such as load balancing for Compute Engine instances and node pools.

GKE provides several benefits to users, including:

- Scalability: GKE allows you to easily scale your application as your traffic grows, without worrying about the underlying infrastructure.

- Reliability: GKE provides built-in high-availability features, such as regional clusters and node auto-repair, that help keep your applications up and running.

- Security: GKE offers advanced security features, such as network policy enforcement and Pod Security Admission (the successor to the PodSecurityPolicy API removed in Kubernetes 1.25), to help protect your applications and data.

- Ease of use: GKE’s user-friendly interface makes it easy to deploy and manage applications on a Kubernetes cluster.

- Cost efficiency: GKE offers several cost-saving features, such as node auto-scaling and preemptible VMs, which help reduce the overall cost of running your applications.
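Node autoscaling, for instance, is a single update on an existing node pool. A minimal sketch, assuming the `my-first-gke` Standard cluster and its `default-pool` node pool created in the tutorial below; the bounds of 1 to 4 nodes are illustrative, not recommendations.

```shell
#!/usr/bin/env bash
CLUSTER_NAME="my-first-gke"   # cluster from the tutorial below
ZONE="europe-west9-a"
MIN_NODES=1                   # illustrative bounds, not recommendations
MAX_NODES=4

# Let the cluster autoscaler add nodes when Pods are pending and remove
# under-used nodes, staying within MIN_NODES..MAX_NODES.
gcloud container clusters update "$CLUSTER_NAME" \
  --zone "$ZONE" \
  --node-pool default-pool \
  --enable-autoscaling \
  --min-nodes "$MIN_NODES" \
  --max-nodes "$MAX_NODES"
```

In Autopilot mode this step does not exist, because GKE sizes the nodes for you.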

Tips

GKE has limitations and challenges, like any technology. Although it has a user-friendly interface, some users may still face a learning curve, and it may not always be the most cost-effective option for every application. Users should understand both the benefits and potential drawbacks of using GKE to make informed decisions about its suitability.

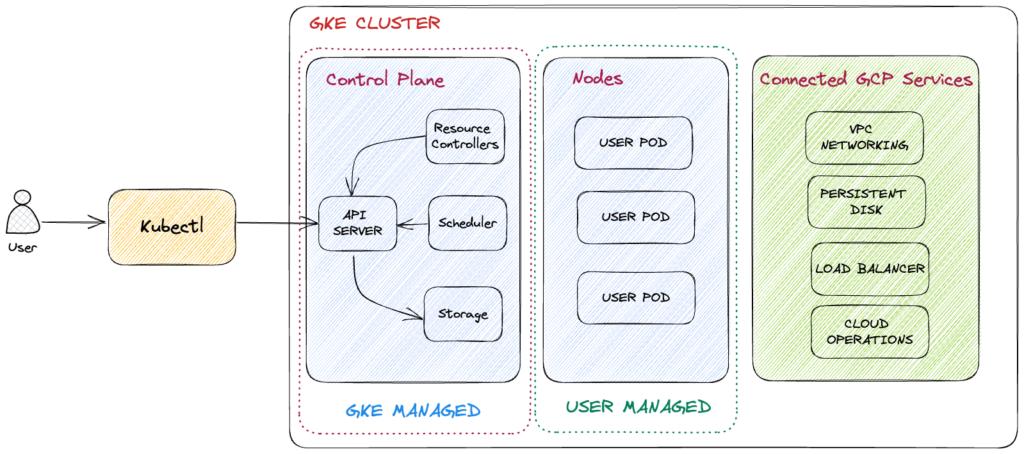

There are two types of clusters available in Google Kubernetes Engine (GKE):

- Autopilot

Autopilot is Google Cloud’s opinionated mode: GKE fully provisions and manages the cluster’s nodes and configuration using pre-defined options. Autopilot clusters come with an optimized configuration tailored to the requirements of production workloads.

- Standard

This mode provides greater flexibility in configuring the underlying infrastructure of the cluster. In Standard mode, you determine the necessary configurations for your production workloads.
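For comparison with the Standard-mode `gcloud container clusters create` command used in the tutorial below, here is a minimal sketch of creating an Autopilot cluster with the gcloud CLI. The cluster name `my-autopilot-gke` is hypothetical and the region reuses the tutorial’s europe-west9 location. Note what is missing: there is no node count or machine type, because GKE manages the nodes, and the location is a region because Autopilot clusters are regional.

```shell
#!/usr/bin/env bash
# Hypothetical cluster name; the region matches the tutorial's europe-west9-a zone.
CLUSTER_NAME="my-autopilot-gke"
REGION="europe-west9"

# Autopilot clusters are regional: pass a region, not a zone.
# No --num-nodes or --machine-type: GKE provisions nodes from your Pod specs.
gcloud container clusters create-auto "$CLUSTER_NAME" \
  --region "$REGION"

# Point kubectl at the new cluster (regional, so --region again).
gcloud container clusters get-credentials "$CLUSTER_NAME" --region "$REGION"
```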

Get Started with GKE

In this section, we will create a Google Kubernetes Engine cluster running several containers, each containing a web server. We will then place a load balancer in front of the cluster and view the page it serves.

To proceed with these steps, you need to fulfill the following prerequisites:

- Access to a Google Cloud account with a project and billing enabled. If you don’t have one, you can sign up for a free trial account.

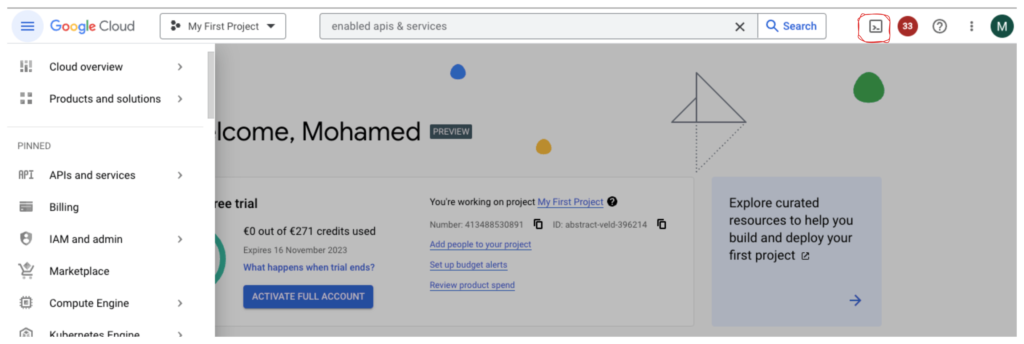

Task 1: Enable the required APIs

- In the Google Cloud console, on the Navigation menu, click APIs & Services.

- Scroll down in the list of enabled APIs, and confirm that both of these APIs are enabled:

- Kubernetes Engine API

- Container Registry API

If either API is missing, click “Enable APIs and Services” at the top, search for each API by name, and enable it for your current project.
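The same check can be done from Cloud Shell with the gcloud CLI. This sketch assumes gcloud is already pointed at your project (Cloud Shell does this when a project is selected). Note that Container Registry has since been deprecated in favor of Artifact Registry; the steps below only pull the public nginx image and do not push anything to a registry.

```shell
#!/usr/bin/env bash
# APIs required by this tutorial.
APIS=(container.googleapis.com containerregistry.googleapis.com)

# Enabling an API that is already enabled is harmless.
gcloud services enable "${APIS[@]}"

# List enabled services and keep only the two we care about.
gcloud services list --enabled | grep -E 'container(registry)?\.googleapis\.com'
```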

Task 2: Start a Kubernetes Engine cluster

In Google Cloud console, on the top right toolbar, click the Activate Cloud Shell button.

Click Continue.

At the Cloud Shell prompt, type the following command to export the environment variable called MY_ZONE.

export MY_ZONE="europe-west9-a"

Start a Kubernetes cluster managed by Kubernetes Engine. Name the cluster my-first-gke and configure it to run 2 nodes:

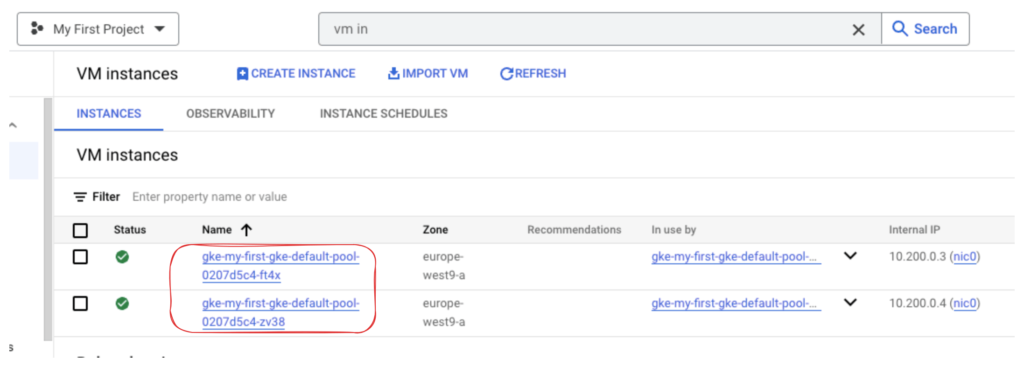

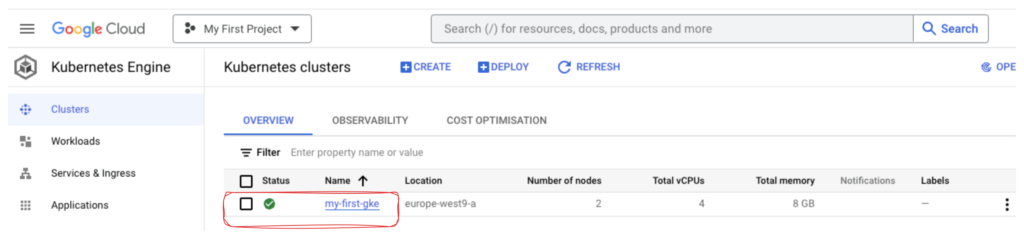

gcloud container clusters create my-first-gke --zone $MY_ZONE --num-nodes 2

Kubernetes Engine provisions the virtual machines for the cluster, which typically takes several minutes.

Creating cluster my-first-gke in europe-west9-a... Cluster is being health-checked (master is healthy)...done.

Created [https://container.googleapis.com/v1/projects/abstract-veld-396214/zones/europe-west9-a/clusters/my-first-gke].

To inspect the contents of your cluster, go to: https://console.cloud.google.com/kubernetes/workload_/gcloud/europe-west9-a/my-first-gke?project=abstract-veld-396214

kubeconfig entry generated for my-first-gke.

NAME: my-first-gke

LOCATION: europe-west9-a

MASTER_VERSION: 1.27.3-gke.100

MASTER_IP: 34.163.54.88

MACHINE_TYPE: e2-medium

NODE_VERSION: 1.27.3-gke.100

NUM_NODES: 2

STATUS: RUNNING

After the cluster is created, check the Kubernetes client and server versions with the kubectl version command:

kubectl version

The gcloud container clusters create command automatically authenticated kubectl for you.

View your running nodes in the Google Cloud console: on the Navigation menu, click Compute Engine > VM instances.

Your Kubernetes cluster is now ready for use.
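To see the same nodes from the command line, or to reconnect kubectl from a new Cloud Shell session or another machine with gcloud and kubectl installed, you can regenerate the kubeconfig entry and list the nodes. A minimal sketch reusing the tutorial’s cluster name and zone:

```shell
#!/usr/bin/env bash
CLUSTER_NAME="my-first-gke"
ZONE="${MY_ZONE:-europe-west9-a}"   # fall back to the tutorial's zone if MY_ZONE is unset

# Fetch the cluster endpoint and credentials into ~/.kube/config.
gcloud container clusters get-credentials "$CLUSTER_NAME" --zone "$ZONE"

# Expect two nodes in the Ready state, matching NUM_NODES: 2 above.
kubectl get nodes -o wide
```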

Task 3: Run and deploy a container

From your Cloud Shell prompt, launch a single instance of the nginx container. (Nginx is a popular web server.)

kubectl create deploy nginx --image=nginx:1.25.2

In Kubernetes, containers run inside Pods. The kubectl create command created a Deployment that manages a single Pod running the nginx container.
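The same Deployment can also be described declaratively, which is how most teams manage workloads in practice. The sketch below is an alternative to the kubectl create command above, not an extra step; it uses the `app: nginx` label because that is the label `kubectl create deploy nginx` applies itself.

```shell
#!/usr/bin/env bash
# Declarative equivalent of: kubectl create deploy nginx --image=nginx:1.25.2
DEPLOYMENT_MANIFEST=$(cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.25.2
          ports:
            - containerPort: 80
EOF
)

# Create the Deployment, or update it if it already exists.
printf '%s\n' "$DEPLOYMENT_MANIFEST" | kubectl apply -f -
```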

View the pod running the nginx container:

kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-7b66446657-smzkr 1/1 Running 0 23s

Expose the nginx container to the Internet:

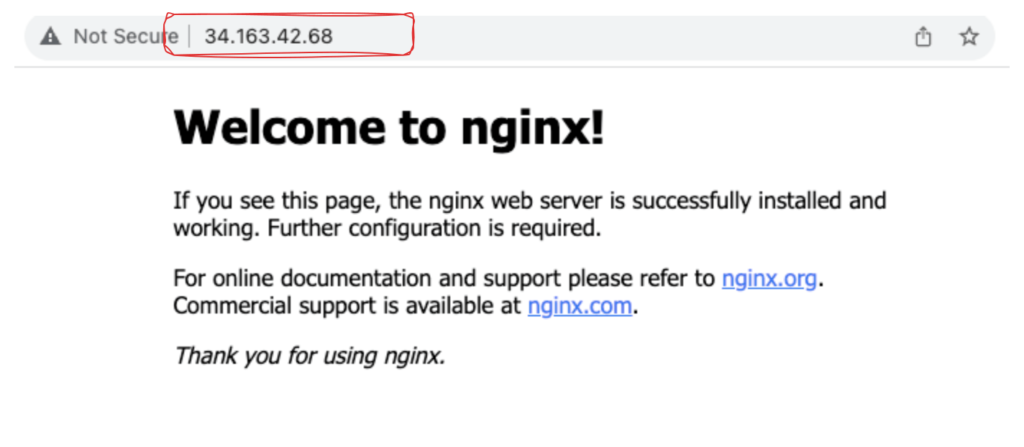

kubectl expose deployment nginx --port 80 --type LoadBalancer

Kubernetes created a Service and an external load balancer with a public IP address attached to it. The IP address stays the same for the lifetime of the Service. Any network traffic sent to that public IP address is routed to the Pods behind the Service, in this case the nginx Pod.
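The kubectl expose command is roughly equivalent to applying a Service manifest like the sketch below. It assumes the Deployment’s Pods carry the `app: nginx` label, which is what `kubectl create deploy nginx` sets; `targetPort` is the container port nginx listens on.

```shell
#!/usr/bin/env bash
# Declarative equivalent of: kubectl expose deployment nginx --port 80 --type LoadBalancer
SERVICE_MANIFEST=$(cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: LoadBalancer      # asks GKE to provision an external load balancer
  selector:
    app: nginx            # routes traffic to Pods with this label
  ports:
    - protocol: TCP
      port: 80            # port exposed on the load balancer
      targetPort: 80      # port nginx listens on inside the container
EOF
)

printf '%s\n' "$SERVICE_MANIFEST" | kubectl apply -f -
```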

View the new service:

kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.36.0.1 <none> 443/TCP 30m

nginx LoadBalancer 10.36.6.220 34.163.42.68 80:30174/TCP 2m46s

You can use the displayed external IP address to reach the nginx container from outside the cluster. If EXTERNAL-IP shows <pending>, wait a minute and run the command again.

Open a new web browser tab and paste the Service’s external IP address into the address bar. The default nginx welcome page is displayed.
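To test from the command line instead of a browser, you can read the external IP from the Service status and request the page with curl. A minimal sketch; the JSONPath assumes the load balancer reports an IP address rather than a hostname, which is how GKE external load balancers appear.

```shell
#!/usr/bin/env bash
# Read the external IP assigned to the nginx Service (empty while still pending).
EXTERNAL_IP=$(kubectl get service nginx \
  -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)

if [ -z "$EXTERNAL_IP" ]; then
  echo "External IP not assigned yet; re-run this in a minute." >&2
else
  # Fetch only the response headers; nginx should answer with HTTP 200.
  curl -sI "http://${EXTERNAL_IP}"
fi
```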

Scale up the number of pods running on your service:

kubectl scale deployment nginx --replicas 3

Confirm that Kubernetes has updated the number of pods:

kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-7b66446657-h5htb 1/1 Running 0 29s

nginx-7b66446657-smzkr 1/1 Running 0 25m

nginx-7b66446657-tqv7x 1/1 Running 0 29s

Well done!

In this section, you set up a Standard-mode Kubernetes cluster in Kubernetes Engine, deployed an application to it as multiple Pods, exposed the application to the internet, and scaled it.
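Scaling to a fixed replica count works, but Kubernetes can also adjust it for you. The sketch below adds a Horizontal Pod Autoscaler to the nginx Deployment; the 100m CPU request, the 50% target, and the 3 to 10 replica range are illustrative assumptions. The CPU request is required because the HPA measures utilization as a percentage of it.

```shell
#!/usr/bin/env bash
CPU_REQUEST="100m"   # illustrative values, not recommendations
TARGET_CPU=50        # target average CPU utilization, in percent of the request
MIN_REPLICAS=3
MAX_REPLICAS=10

# The HPA measures utilization relative to the CPU request, so set one first.
kubectl set resources deployment nginx --requests="cpu=${CPU_REQUEST}"

# Create an HPA named "nginx" that keeps replicas between MIN and MAX.
kubectl autoscale deployment nginx \
  --cpu-percent="$TARGET_CPU" \
  --min="$MIN_REPLICAS" \
  --max="$MAX_REPLICAS"

# Show current vs target utilization and the replica count.
kubectl get hpa nginx
```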

GKE Standard vs Autopilot

Autopilot in GKE takes care of various complexities associated with your cluster’s lifecycle. The table below illustrates the available options based on the cluster’s mode of operation:

- Pre-configured: This setting is built in and cannot be modified.

- Default: This setting is enabled by default, but you can override it.

- Optional: This setting is disabled by default, but you can enable it.

| Options | Autopilot mode | Standard mode |
|---|---|---|
| Basic cluster type | Availability and version: Pre-configured: Regional. Default: Regular release channel | Availability and version: Optional: Regional or zonal; Release channel or static version |
| Nodes and node pools | Managed by GKE. | Managed, configured, and specified by you. |
| Provisioning resources | GKE dynamically provisions resources based on your Pod specification. | You manually provision additional resources and set the overall cluster size. Configure cluster autoscaling and node auto-provisioning to help automate the process. |
| Image type | Pre-configured: Container-Optimized OS with containerd | Choose one of the following: Container-Optimized OS with containerd; Container-Optimized OS with Docker; Ubuntu with containerd; Ubuntu with Docker; Windows Server LTSC; Windows Server SAC (the Docker-based images were removed in GKE 1.24) |
| Billing | Pay per Pod resource requests (CPU, memory, and ephemeral storage) | Pay per node (CPU, memory, boot disk) |
| Security | Pre-configured: Workload Identity; Shielded nodes; Secure boot. Optional: Customer-managed encryption keys (CMEK); Application-layer secrets encryption; Google Groups for RBAC | Optional: Workload Identity; Shielded nodes; Secure boot; Application-layer secrets encryption; Binary Authorization; Customer-managed encryption keys (CMEK); Google Groups for RBAC |
| Networking | Pre-configured: VPC-native (alias IP); Maximum 32 Pods per node; Intranode visibility. Default: Public cluster; Default CIDR ranges (review your CIDR ranges to factor in expected cluster growth); Network name/subnet. Optional: Private cluster; Cloud NAT (private clusters only); Authorized networks | Optional: VPC-native (alias IP); Maximum 110 Pods per node; Intranode visibility; CIDR ranges and max cluster size; Network name/subnet; Private cluster; Cloud NAT; Network policy; Authorized networks |
| Upgrades, repair, and maintenance | Pre-configured: Node auto-repair; Node auto-upgrade; Maintenance windows; Surge upgrades | Optional: Node auto-repair; Node auto-upgrade; Maintenance windows; Surge upgrades |
| Authentication credentials | Pre-configured: Workload Identity | Optional: Compute Engine service account; Workload Identity |
| Scaling | Pre-configured: Autopilot handles all the scaling and configuring of your nodes. Default: You configure Horizontal Pod autoscaling (HPA); You configure Vertical Pod autoscaling (VPA) | Optional: Node auto-provisioning; You configure cluster autoscaling; HPA; VPA |
| Logging | Pre-configured: System and workload logging | Default: System and workload logging. Optional: System-only logging |
| Monitoring | Pre-configured: System monitoring. Optional: System and workload monitoring | Default: System monitoring. Optional: System and workload monitoring |
| Routing | Pre-configured: Pod-based routing; Network endpoint groups (NEGs) enabled | Choose node-based packet routing (default) or Pod-based routing |
| Cluster add-ons | Pre-configured: HTTP load balancing. Default: Compute Engine persistent disk CSI Driver; Compute Engine Filestore CSI Driver; NodeLocal DNSCache. Optional: Managed Anthos Service Mesh (Preview); Istio (use Managed Anthos Service Mesh (Preview)) | Optional: Compute Engine persistent disk CSI Driver; Compute Engine Filestore CSI Driver; HTTP load balancing; NodeLocal DNSCache; Cloud Build; Cloud Run; Cloud TPU; Config Connector; Managed Anthos Service Mesh; Kalm; Usage metering |
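As the Scaling row notes, Vertical Pod autoscaling is something you configure yourself; on a Standard cluster you must also enable it first, while Autopilot has it enabled already. A minimal sketch, assuming the tutorial’s `my-first-gke` cluster and a hypothetical VPA object named `nginx-vpa` in recommendation-only mode (`updateMode: "Off"`), so it suggests requests without restarting Pods.

```shell
#!/usr/bin/env bash
CLUSTER_NAME="my-first-gke"
ZONE="europe-west9-a"

# Standard only: turn on VPA for the cluster (skip this step on Autopilot).
gcloud container clusters update "$CLUSTER_NAME" \
  --zone "$ZONE" \
  --enable-vertical-pod-autoscaling

# Hypothetical recommendation-only VPA for the nginx Deployment: "Off" never evicts Pods.
VPA_MANIFEST=$(cat <<'EOF'
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: nginx-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  updatePolicy:
    updateMode: "Off"
EOF
)
printf '%s\n' "$VPA_MANIFEST" | kubectl apply -f -

# After the workload has run for a while, recommended requests appear here.
kubectl describe vpa nginx-vpa
```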

In this section, we rate GKE Standard and Autopilot against essential GKE criteria, highlighting the differences between the two modes of operation.

Ratings use a scale of one to five stars (*), where one star is the lowest and five stars the highest rating. For “K8s & GKE skills required,” more stars means fewer skills are needed.

| Criteria | GKE Autopilot | GKE Standard |
|---|---|---|
| K8s & GKE skills required | ***** | *** |
| Control over the infrastructure | ** | **** |
| Cluster management time | **** | *** |
| Time-to-market | ***** | *** |
| Automation | **** | *** |
| Security | *** | *** |
| Pricing | *** | *** |
| Reliability | ***** | *** |
| Customer experience (CX) | ***** | **** |
| Partner workload integration | *** | ***** |

Here is the reasoning behind each rating:

- K8s & GKE skills required

Autopilot is rated 5 stars for “K8s & GKE skills required” as it reduces the need for specialized skills in Kubernetes and GKE. Autopilot manages many aspects of the cluster, such as scaling and security patches, reducing manual intervention. This makes it easy for teams with limited expertise to manage their clusters and focus on core functions.

In contrast, GKE Standard requires more expertise than Autopilot for tasks such as upgrades, security patches, and node scaling. This can be time-consuming, requiring knowledge of Kubernetes and GKE, leading to a rating of 3 stars for “K8s & GKE skills required”.

- Control over the infrastructure

For “Control over the infrastructure,” Autopilot has a rating of 2 stars because it abstracts much of the underlying infrastructure from the user, limiting granular control. As in any GKE cluster, Google manages the control plane (the API server, etcd database, and controller manager), and in Autopilot it also manages the nodes, their machine types, and their configuration. Additionally, SSH access to nodes is blocked for security purposes, further limiting control.

In contrast, Standard mode has a 4-star rating: it gives users a higher degree of control, letting them choose machine types, node pools, node images, and networking options such as network policy enforcement. This flexibility allows the infrastructure to be fine-tuned for specific use cases.
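Network policy is a good example of that extra control. On a Standard cluster that does not use GKE Dataplane V2, enforcement has to be switched on before NetworkPolicy objects take effect. A sketch, assuming the tutorial’s `my-first-gke` cluster; the policy name and the `app: backend` and `role: frontend` labels are hypothetical. The second update can recreate the cluster’s nodes, so run it outside busy periods.

```shell
#!/usr/bin/env bash
CLUSTER_NAME="my-first-gke"   # cluster from the tutorial
ZONE="europe-west9-a"

# Standard clusters without GKE Dataplane V2: enable the add-on first,
# then enforcement on the nodes (this second step can recreate the nodes).
gcloud container clusters update "$CLUSTER_NAME" --zone "$ZONE" \
  --update-addons=NetworkPolicy=ENABLED
gcloud container clusters update "$CLUSTER_NAME" --zone "$ZONE" \
  --enable-network-policy

# Hypothetical policy: only Pods labelled role=frontend may reach
# Pods labelled app=backend, and only on TCP port 8080.
NETWORK_POLICY=$(cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-allow-frontend
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 8080
EOF
)
printf '%s\n' "$NETWORK_POLICY" | kubectl apply -f -
```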

- Cluster management time

GKE Standard and Autopilot are both effective in reducing ongoing cluster management time.

GKE Standard receives a rating of *** for cluster management because GKE already handles several ongoing activities in the cluster lifecycle, such as monitoring, tuning, right-sizing, and auto-repair. This can reduce cluster management time by up to 75%.

However, GKE Autopilot outperforms GKE Standard with a higher rating of **** for cluster management. With Autopilot, Google manages nodes and creates new ones for apps while configuring automatic upgrades and repairs. As a result, organizations can benefit from an 83% reduction in ongoing cluster management time.

- Time-to-market

GKE Autopilot earns a ***** rating for its ability to speed up build and deployment processes, streamline Kubernetes operations, and reduce developer impacts. Forrester Research found that companies using Autopilot saw a 45% improvement in developer productivity, thanks to Google managing node pools and nodes, simplifying resource provisioning, scaling, maintenance, and security.

As a result, Autopilot customers deploy containerized applications 2.6x faster than the competition. In contrast, GKE Standard is rated *** as it lacks the streamlined operations and developer impacts provided by Autopilot, making it less ideal for faster time-to-market.

- Automation

Automation is a key criterion when it comes to choosing a Kubernetes management platform, and GKE Autopilot excels in this regard. Google manages nodes, creating new ones for applications and configuring automatic upgrades and repairs, which greatly reduces the overhead of manual maintenance tasks. GKE Autopilot also automatically scales nodes and workloads based on traffic, further reducing manual intervention required for managing Kubernetes clusters.

This level of automation not only saves time but also improves the reliability of the platform, as there is less room for human error. Therefore, GKE Autopilot is rated **** for automation. GKE Standard, on the other hand, is rated *** for automation as it provides some level of automation, but not to the extent of Autopilot’s streamlined operations.

- Security

Autopilot and Standard mode both provide a solid level of security for Kubernetes clusters and are rated 3 stars. Autopilot mode manages upgrades and security patches, blocks SSH access to nodes, and abstracts the infrastructure from the user to reduce the potential attack surface.

In contrast, Standard mode provides more control but requires users to take proactive security measures, which demands more expertise and attention to detail. Although both modes have the same security rating, Autopilot is an excellent choice for less experienced teams due to its reduced need for security expertise.

- Pricing

Autopilot’s pay-as-you-go pricing model can be highly cost-effective because it charges for the CPU, memory, and ephemeral storage your Pods request rather than for whole nodes, so you do not pay for unused node capacity. Moreover, Autopilot removes much of the need for continuous Kubernetes cost optimization and reduces the Kubernetes expertise needed to get started.

However, for workloads whose resource requests fluctuate heavily, this model can make costs harder to predict.

GKE Standard bills for Compute Engine nodes, so it may be more cost-effective for workloads with many long-running nodes: sustained use discounts on eligible machine types lower the price the longer a node runs. Preemptible (now Spot) node pools in GKE Standard can also be a cost-effective option for CI/CD workloads. However, GKE Standard still requires expertise to optimize workloads.
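For the CI/CD case, a Spot VM node pool (Spot VMs are the successor to preemptible VMs; `--preemptible` is still accepted) can be added to a Standard cluster and allowed to scale down to zero between jobs. A minimal sketch, assuming the tutorial’s `my-first-gke` cluster; the pool name `ci-spot-pool` and the node bounds are hypothetical. Autopilot offers the equivalent through Spot Pods, which a workload requests with the `cloud.google.com/gke-spot: "true"` node selector.

```shell
#!/usr/bin/env bash
CLUSTER_NAME="my-first-gke"
ZONE="europe-west9-a"
POOL_NAME="ci-spot-pool"   # hypothetical pool for CI/CD jobs

# Spot VMs are cheaper but can be reclaimed by Compute Engine at any time,
# which suits retryable CI jobs rather than user-facing services.
gcloud container node-pools create "$POOL_NAME" \
  --cluster "$CLUSTER_NAME" \
  --zone "$ZONE" \
  --spot \
  --num-nodes 1 \
  --enable-autoscaling --min-nodes 0 --max-nodes 3
```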

Overall, both modes offer cost-effective options through their different pricing models, so GKE Standard and Autopilot each receive a rating of 3 stars for pricing.
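To make the difference between the two billing models concrete, the sketch below compares how much CPU each mode would bill for in the tutorial’s setup: three nginx replicas on a Standard cluster of two e2-medium nodes (2 vCPUs each). The 250m per-Pod CPU request is an assumption, and the comparison deliberately ignores unit prices (which differ between the modes), memory, storage, and any Autopilot minimum requests; use the Google Cloud pricing calculator for real figures.

```shell
#!/usr/bin/env bash
# Assumed inputs: 3 replicas (from the scaling step), a hypothetical 250m CPU
# request per Pod, and the tutorial's 2 e2-medium nodes with 2 vCPUs each.
replicas=3
cpu_request_m=250
nodes=2
vcpu_per_node=2

# Autopilot bills for the sum of Pod CPU requests (in millicores).
autopilot_billed_m=$(( replicas * cpu_request_m ))

# Standard bills for every provisioned node, used or not (1 vCPU = 1000m).
standard_billed_m=$(( nodes * vcpu_per_node * 1000 ))

echo "Autopilot bills for ${autopilot_billed_m}m of CPU"
echo "Standard bills for ${standard_billed_m}m of CPU"
```

Here Autopilot bills for 750m of CPU while Standard bills for 4000m, most of which sits idle. As Pod requests grow toward the nodes’ capacity, the gap narrows, and Standard can come out cheaper if its per-node prices are lower than Autopilot’s per-request prices.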

- Reliability

Autopilot has a ***** reliability rating because it provides a workload-level SLA backed by Google SRE, automatic provisioning and scaling of resources, and flexible maintenance options. This translates into higher uptime, making Autopilot the more reliable choice for Kubernetes. GKE Standard has a rating of *** in comparison.

- Customer experience (CX)

GKE Autopilot offers seamless and user-friendly Kubernetes cluster deployment and management, eliminating the need for manual intervention. With automatic cluster configuration, management, and infrastructure scaling, customers can adopt Kubernetes with confidence.

The platform also features simplified monitoring and logging, cluster autoscaling, and Google SRE monitoring, resulting in a superior customer experience. GKE Autopilot receives a ***** rating, while GKE Standard receives a **** rating.

- Partner workload integration

Both GKE Autopilot and GKE Standard provide support for integrating with partner workloads, such as Istio and Anthos. However, GKE Standard provides more flexibility and customization options for integrating with partner workloads. Therefore, GKE Standard receives a higher rating of ***** compared to GKE Autopilot’s rating of ***.

To obtain the list of partners authorized to use GKE Autopilot, please refer to this documentation: https://cloud.google.com/kubernetes-engine/docs/resources/autopilot-partners.


Which Mode is Right for You?

Simply put, Google believes Autopilot is the best cluster mode for most Kubernetes use cases.

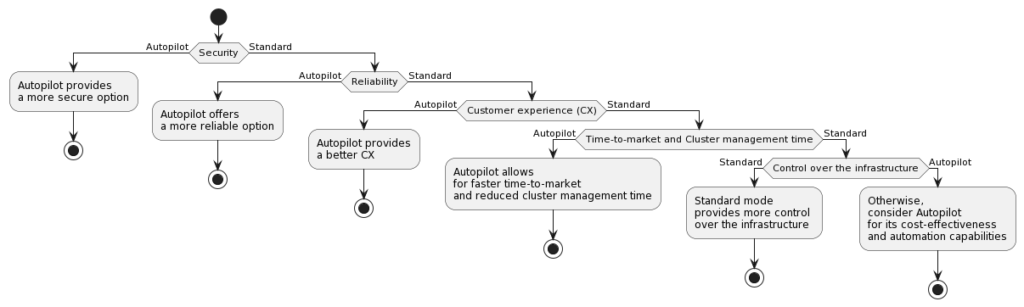

Let’s examine this decision flow, based on the criteria explained in the previous section:

GKE decision flow

The decision flow provides guidelines for organizations to choose between GKE Autopilot and Standard based on their needs. The criteria are arranged in order of importance, with the most critical at the top.

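Security is the top priority when choosing between Autopilot and Standard. Both provide high levels of security, but Autopilot applies many hardening settings by default (such as Shielded nodes, Secure boot, and Workload Identity, with SSH access to nodes blocked), which makes it a better option for security-conscious organizations.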

Reliability is closely tied to security and is the second criterion. Autopilot’s automatic scaling and failover capabilities ensure high availability and minimal downtime, making it the better option for organizations that prioritize reliability.

Customer experience is the third criterion. Autopilot simplifies Kubernetes cluster deployment and management and provides a more user-friendly interface for workload management, making it the better option for organizations that value a positive customer experience.

Time-to-market and cluster management time together form the fourth criterion. Autopilot can deploy and manage clusters faster and more efficiently than Standard mode, making it the better option for organizations that prioritize speed and efficiency.

Infrastructure control is the fifth criterion, with Standard mode offering more control than Autopilot. Organizations that require fine-grained control over their infrastructure will find Standard mode a better option.

Additional criteria like automation, pricing, and partner workload integration can help organizations make a more informed decision based on their specific needs and requirements.

The criteria mentioned in the decision flow are general guidelines that organizations can use to decide which GKE option is best for them. However, each organization’s unique requirements and priorities may impact their decision, and they should carefully evaluate each option to determine which one best meets their specific needs.

Conclusion

Autopilot mode for GKE has made Kubernetes more accessible to everyone by automating many of the tedious and error-prone tasks of Kubernetes. With Autopilot as the default and recommended mode of GKE, developers can focus on their applications instead of infrastructure management.

However, it’s important to consider the unique workload and priorities of your organization when choosing GKE Standard vs Autopilot. By weighing the pros and cons and identifying the importance of specific criteria, you can make an informed decision that best meets your needs.
